Two very smart folks, Mark Rosenzweig and Martin Ravallion, have reviews of Poor Economics in the latest Journal of Economic Literature (thanks to Abhi and Andrea for the papers). Obviously self-recommending when smart economists review smart economists. But there does seem to be a bit of rehashing.

Martin's biggest score is the "where the hell is China?" line. Some of the other criticisms are a bit weaker.

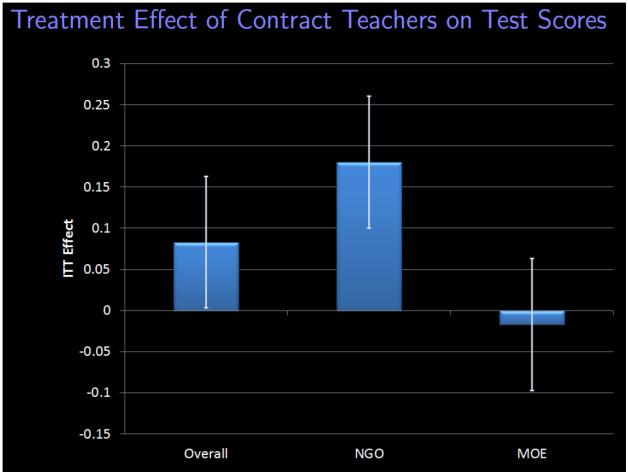

Another likely bias in the learning process is that J-PAL’s researchers have evidently worked far more with nongovernmental organizations (NGOs) than governments.

Which is a bit of a cheap shot, and a bit inaccurate. Researchers have worked with whoever will let them experiment, which yes, initially was NGOs, but is increasingly governments: see Peru's Quipu commission, Chile's Compass commission, the teaching assistant initiative in Ghana, working with the planning Ministry in South Africa, experimenting with police service reform in Rajasthan, even Britain's Behavioural Insights Unit.

Then

how confident can we really be that poor people all over the world will radically change their health-seeking behaviors with a modest subsidy, based on an experiment in one town in Rajasthan, which establishes that lower prices for vaccination result in higher demand?

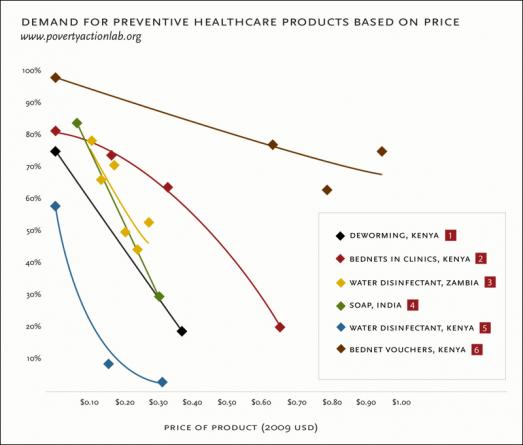

Ummmm... well, that's why J-PAL's policy recommendation for health pricing is based on 6 different studies....

Mark scores his biggest hit in the final footnote on the last page of his article:

Also absent is a discussion of the standard but major problem in the implementation of any programs or transfers targeted to the poor and that do not really spur development—moral hazard.

"Moral hazard" works at both the individual and national government level. If you get aid, you are probably less likely to work hard. The critical question is the magnitude of this effect. I think that on balance the positive value of effective aid outweighs the moral hazard, but that is more of a feeling than an evidence-based proposition. This is also one of the key points made by aid critics Bauer/Easterly/Moyo. Not necessarily that aid doesn't work, as Banerjee/Duflo would like to present their argument, but that even if aid does work, the negative moral hazard effect might outweigh the positive. I haven't seen this argument really addressed at all.

The other serious and neglected criticism for me is on general equilibrium, raised by Daron Acemoglu in the Journal of Economic Perspectives. What if you measure a positive impact of a program on earnings, but those gains are coming at the expense of others? A training program that increases earnings might just be equipping some individuals to out-compete others in the market, rather than necessarily increasing aggregate productivity, in which case scaling the program ain't gonna work.

So maybe I've missed them - but has anyone seen a convincing rebuttal to the moral hazard and general equilibrium critiques of micro aid project impact evaluation?

-----

Update: A couple of things I missed in my haste - Abhi points out that Rosenzweig makes good points on the sometimes tiny effect sizes lauded in Poor Economics (e.g. where "15% increase" translates to something like 2 weeks of schooling or 50 cents), and that RCTs can focus our attention away from the big (important?) questions, but I feel this criticism is pretty well rehearsed.

Update 2: Also Ravallion loses points for his clichéd title: "Fighting Poverty One Experiment at a Time". "x one y at a time" is a boring, tired, tired catchphrase.

Update 3: Ravallion gains points for coining "regressionistas."